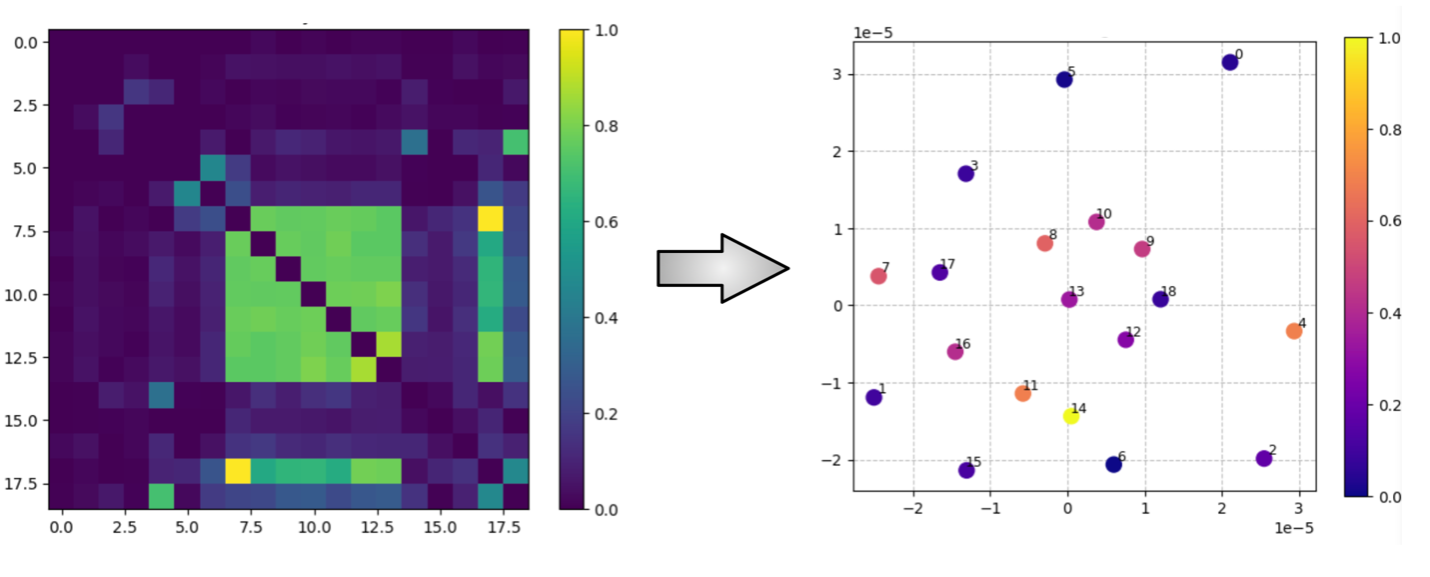

Figure 1. Mapping redundancy onto atom positions. Highly redundant features are placed within blockade range, while independent ones remain spatially separated.

Analog Quantum Feature Selection with Neutral Atoms

From Classical Feature Selection to Analog Quantum Optimization

In machine learning, feature selection searches for the smallest subset of variables that preserves predictive accuracy while avoiding redundancy. Since each feature can be either included or excluded, the search space grows exponentially (2ᴺ subsets for N features), which places the general problem in the NP-hard category. Classical heuristics such as greedy search or mutual-information ranking approximate this search, but they either scale poorly or fail to capture dependencies among features.
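A quick count shows how fast exhaustive search becomes impractical. The helper `num_subsets` is hypothetical and only illustrates the arithmetic; the feature counts are those of the benchmark datasets reported in the results section.

```python
def num_subsets(n_features: int) -> int:
    """Each feature is either in or out, so N features give 2**N candidate subsets."""
    return 2 ** n_features


# Feature counts of the benchmark datasets used in the results section.
for name, n in [("Adult Income", 14), ("Bank Marketing", 16), ("Telco Churn", 19)]:
    print(f"{name}: {n} features -> {num_subsets(n):,} subsets")
```

This prints 16,384, 65,536 and 524,288 subsets respectively; every additional feature doubles the count.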

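The task can be formulated as a Quadratic Unconstrained Binary Optimization (QUBO) problem over binary variables zᵢ ∈ {0,1}, where zᵢ = 1 if feature i is selected. The objective balances relevance, the information each feature shares with the target, against redundancy, the information shared among features: relevant features lower the cost, while selecting redundant pairs together raises it.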
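For concreteness, one common way to write such an objective is shown below. It is an illustrative form, not necessarily the exact objective used in this work: I(xᵢ; y) denotes the mutual information between feature xᵢ and the target y, I(xᵢ; xⱼ) the mutual information between two features, and the trade-off weight α ∈ [0, 1] is our assumption.

```latex
\min_{z \in \{0,1\}^N} \; E(z) \;=\; -\,\alpha \sum_{i=1}^{N} I(x_i; y)\, z_i \;+\; (1-\alpha) \sum_{1 \le i < j \le N} I(x_i; x_j)\, z_i z_j
```

The negative linear term rewards selecting relevant features, and the positive quadratic term adds a penalty whenever two redundant features are selected together. A larger α favors relevance and tends to admit more features.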

Rather than solving this QUBO digitally, we implement it physically using arrays of neutral atoms in Rydberg states on Amazon Braket’s Analog Hamiltonian Simulation (AHS) framework [1].

Feature relevance is encoded as a local atomic detuning, while redundancy is mapped to Rydberg interactions that depend on interatomic distance. During adiabatic evolution, the system’s natural dynamics guide it toward low-energy configurations, ideally its ground state, whose pattern of excited atoms encodes the optimal subset. Feature selection thus becomes a continuous analog quantum optimization executed directly by the quantum hardware.
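The correspondence can be written out with the Rydberg Hamiltonian in the form commonly used for neutral-atom analog devices (notation ours: Ω is the Rabi frequency, φ the laser phase, Δₖ the detuning at atom k, nₖ = |rₖ⟩⟨rₖ| the Rydberg-state occupation, and Vⱼₖ = C₆/|𝑥⃗ⱼ − 𝑥⃗ₖ|⁶ the van der Waals interaction). The second line is the classical energy left when the drive is ramped to zero at the end of the sweep:

```latex
\begin{aligned}
H(t) &= \sum_{k} \frac{\Omega(t)}{2}\left( e^{i\phi(t)}\, |g_k\rangle\langle r_k| + e^{-i\phi(t)}\, |r_k\rangle\langle g_k| \right) - \sum_{k} \Delta_k(t)\, n_k + \sum_{j<k} V_{jk}\, n_j n_k, \\
E(n) &= \left. H \right|_{\Omega = 0} = -\sum_{k} \Delta_k\, n_k + \sum_{j<k} V_{jk}\, n_j n_k, \qquad n_k \in \{0, 1\}.
\end{aligned}
```

Identifying nₖ with zₖ, a positive detuning Δₖ plays the role of feature relevance and Vⱼₖ that of pairwise redundancy, so E(n) has the same linear-plus-quadratic structure as the feature-selection QUBO, up to an overall scale.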

Analog Quantum Computation as Natural Feature Selection: The Rydberg Blockade

This same optimization balance appears naturally in the physics of neutral atoms excited to Rydberg states. When two atoms are closer than a critical distance known as the Rydberg blockade radius, they cannot be excited simultaneously, because their strong dipole–dipole (van der Waals) interaction shifts the doubly excited state out of resonance with the driving laser. This blockade mechanism mirrors redundancy control in feature selection: if two variables carry overlapping information, they should not be selected together.
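The blockade radius can be estimated as the distance at which the interaction C₆/R⁶ equals the Rabi frequency Ω, giving R_b = (C₆/Ω)^(1/6). The sketch below assumes C₆ = 5.42 × 10⁻²⁴ rad·m⁶/s (the order of magnitude reported for rubidium Rydberg states on current devices) and Ω = 2π × 2.5 MHz; both numbers are illustrative assumptions, not parameters taken from this work.

```python
import math

# Assumed van der Waals coefficient in rad*m^6/s (illustrative value).
C6 = 5.42e-24


def blockade_radius(rabi_frequency: float, c6: float = C6) -> float:
    """Distance (m) at which the interaction C6 / R**6 equals the Rabi frequency.

    Closer atoms are blockaded: the doubly excited state is shifted too far
    off resonance for the drive to excite both atoms.
    """
    return (c6 / rabi_frequency) ** (1.0 / 6.0)


def vdw_interaction(distance: float, c6: float = C6) -> float:
    """Van der Waals interaction strength V = C6 / R**6 in rad/s."""
    return c6 / distance ** 6


omega = 2 * math.pi * 2.5e6  # assumed Rabi frequency, rad/s
r_b = blockade_radius(omega)
print(f"Blockade radius: {r_b * 1e6:.2f} um")
```

With these assumed numbers the radius comes out at roughly 8 µm. Because R_b scales only as Ω^(−1/6), even large changes in drive strength move it modestly.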

In our analog quantum formulation, each atom represents a feature. Feature relevance is encoded as a local detuning bias that favors excitation, while redundancy is encoded through distance-dependent interactions—atoms representing correlated features are placed within blockade range, so the excitation of one suppresses the other. Independent features are separated beyond the blockade distance, allowing simultaneous excitation.
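The placement rule above amounts to building a unit-disk graph: two features are linked whenever their atoms sit within the blockade radius. A minimal sketch, in which the three-atom layout and the 8 µm radius are hypothetical values chosen for illustration:

```python
import itertools
import math


def blockade_pairs(positions, r_blockade):
    """Return index pairs (i, j), i < j, of atoms closer than the blockade radius.

    In this encoding such pairs stand for redundant features: the blockade
    prevents both atoms from being excited (selected) at the same time.
    """
    pairs = []
    for (i, p), (j, q) in itertools.combinations(enumerate(positions), 2):
        if math.dist(p, q) < r_blockade:
            pairs.append((i, j))
    return pairs


# Hypothetical layout in micrometres: features 0 and 1 are redundant and sit
# close together; feature 2 is independent and placed far away.
positions = [(0.0, 0.0), (5.0, 0.0), (20.0, 0.0)]
print(blockade_pairs(positions, r_blockade=8.0))
```

Only the 5 µm pair falls inside the assumed radius, so features 0 and 1 are treated as mutually exclusive, while feature 2 can be selected alongside either of them.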

Thus, the system’s own physical dynamics perform feature selection: it evolves toward low-energy configurations that minimize redundancy and favor relevant features. Rather than searching combinatorially, the array of Rydberg atoms is steered toward the optimal subset by its intrinsic quantum dynamics, and measuring which atoms end up excited reads that subset out.
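To see what "low-energy configuration" means concretely, the sketch below evaluates the classical energy that remains once the laser drive is switched off (detuning rewards excitation, interactions penalize nearby excited pairs) and finds its minimum by brute force. The detunings and interaction values are hypothetical, and exhaustive search stands in for the analog evolution purely as a classical reference.

```python
import itertools


def classical_energy(n, detuning, interaction):
    """E(n) = -sum_k Delta_k n_k + sum_{j<k} V_jk n_j n_k for occupations n_k in {0, 1}."""
    e = -sum(d * nk for d, nk in zip(detuning, n))
    for j, k in itertools.combinations(range(len(n)), 2):
        e += interaction[j][k] * n[j] * n[k]
    return e


def ground_state(detuning, interaction):
    """Exhaustively find the lowest-energy occupation vector among all 2**N candidates.

    This enumeration is exactly what the analog evolution is meant to avoid;
    it is used here only to show which configuration has the lowest energy.
    """
    configs = itertools.product((0, 1), repeat=len(detuning))
    return min(configs, key=lambda n: classical_energy(n, detuning, interaction))


# Hypothetical example (arbitrary units): relevance encoded as detuning.
# Atoms 0 and 1 are blockaded (large V); atom 2 interacts only weakly.
detuning = [1.0, 0.8, 0.5]
interaction = [
    [0.0, 10.0, 0.01],
    [10.0, 0.0, 0.01],
    [0.01, 0.01, 0.0],
]
print(ground_state(detuning, interaction))
```

The minimum, (1, 0, 1), keeps the more relevant member of the blockaded pair together with the independent feature, which is the behavior the encoding is designed to produce.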

To translate data correlations into spatial structure, we construct a redundancy matrix from pairwise mutual information, convert it into target distances so that strongly redundant pairs lie close together, and embed these distances in two dimensions via multidimensional scaling (MDS). The resulting atomic layout fixes the interaction strengths through the van der Waals potential Vᵢⱼ = C₆ / |𝑥⃗ᵢ − 𝑥⃗ⱼ|⁶, where C₆ is the interaction coefficient of the chosen Rydberg state. Local detunings encode feature relevance, while the distances implement redundancy constraints, creating a direct physical map from statistical dependencies to atomic interactions.
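This pipeline can be sketched in pure Python. It is a minimal illustration under assumptions of our own: mutual information is estimated with a simple plug-in estimator on discrete data; redundancy is turned into a target distance by linear rescaling between hypothetical bounds `d_min` and `d_max` (in µm); MDS is the classical (Torgerson) variant computed by power iteration; and C₆ = 5.42 × 10⁻²⁴ rad·m⁶/s is an assumed, illustrative value.

```python
import math
import random
from collections import Counter

C6 = 5.42e-24  # assumed van der Waals coefficient, rad*m^6/s (illustrative)


def mutual_information(a, b):
    """Plug-in estimate of mutual information (nats) between two discrete sequences."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((c / n) * math.log(c * n / (pa[x] * pb[y])) for (x, y), c in pab.items())


def classical_mds(dist, dims=2, iters=500, seed=0):
    """Classical (Torgerson) MDS via power iteration with deflation.

    Assumes the leading eigenvalues of the centred Gram matrix are positive,
    which holds when the input distances are Euclidean.
    """
    n = len(dist)
    sq = [[d * d for d in row] for row in dist]
    row_mean = [sum(r) / n for r in sq]
    grand_mean = sum(row_mean) / n
    # Double centring: B = -1/2 * J D^2 J (D^2 is symmetric, so row means = column means).
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean) for j in range(n)]
         for i in range(n)]
    rng = random.Random(seed)
    coords = [[0.0] * dims for _ in range(n)]
    for k in range(dims):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        for _ in range(iters):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # nothing left to extract in this direction
                break
            v = [x / norm for x in w]
        lam = max(0.0, sum(v[i] * b[i][j] * v[j] for i in range(n) for j in range(n)))
        for i in range(n):
            coords[i][k] = v[i] * math.sqrt(lam)
        # Deflate so the next pass finds the next-largest eigenvalue.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords


def layout_from_redundancy(redundancy, d_min=4.0, d_max=20.0):
    """Map a redundancy matrix to 2-D positions in micrometres.

    Hypothetical conversion: the target distance grows linearly from d_min
    (most redundant pair) to d_max (independent pair).
    """
    n = len(redundancy)
    off_diag = (redundancy[i][j] for i in range(n) for j in range(n) if i != j)
    top = max(off_diag, default=0.0) or 1.0
    dist = [[0.0 if i == j else d_min + (d_max - d_min) * (1.0 - redundancy[i][j] / top)
             for j in range(n)] for i in range(n)]
    return classical_mds(dist)


def interaction_matrix(coords_um, c6=C6):
    """Van der Waals interactions V_ij = C6 / r_ij**6 in rad/s (positions in um)."""
    n = len(coords_um)
    return [[0.0 if i == j else c6 / (math.dist(coords_um[i], coords_um[j]) * 1e-6) ** 6
             for j in range(n)] for i in range(n)]


# Hypothetical toy data: f1 duplicates f0 (fully redundant); f2 is independent of both.
f0 = [0, 0, 1, 1, 0, 1, 0, 1]
f1 = list(f0)
f2 = [0, 1, 0, 1, 1, 0, 0, 1]
features = [f0, f1, f2]
red = [[mutual_information(a, b) for b in features] for a in features]
coords = layout_from_redundancy(red)
V = interaction_matrix(coords)
```

In the toy example the duplicated pair lands 4 µm apart and the independent feature 20 µm from both, so their interaction differs by a factor of (20/4)⁶ = 15,625. Classical MDS reproduces target distances exactly only when they are Euclidean; for real mutual-information matrices the 2-D embedding is an approximation, so the realized interactions are worth checking against the intended redundancy structure.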

Results on Benchmark Datasets

We evaluated our analog quantum feature selection (QFS) approach on three publicly available binary classification datasets with distinct redundancy profiles and variable types:

Adult Income [2] — Contains 48,842 samples and 14 features, combining numerical and categorical variables related to demographics, education, and occupation.

Bank Marketing [3] — Includes 45,211 samples and 16 input features describing client attributes and campaign interactions from a Portuguese bank. The goal is to predict whether a customer will subscribe to a term deposit.

Telco Churn [4] — Comprises 7,043 samples and 19 features describing telecom service usage, contracts, and customer demographics. The target is to predict whether a customer will leave the company.

The table below summarizes the main outcomes obtained with an XGBoost classifier on the three benchmark datasets. In all three cases, the features chosen by QFS yield a higher AUC than the baseline while using 75–86% fewer variables than the original datasets.

This behavior highlights the ability of the proposed neutral-atom encoding to perform efficient, physically grounded feature selection: the system dynamics isolate the most relevant, non-redundant features without compromising predictive performance. Because larger problems map onto larger atom arrays, the approach has the potential to scale to regimes where classical solutions become costly.

| Dataset | Original Features | QFS Features | AUC (QFS) | AUC (Baseline) | Reduction (%) |
|---|---|---|---|---|---|
| Adult Income | 14 | 2 | 0.857 | 0.836 | 86 |
| Bank Marketing | 16 | 4 | 0.912 | 0.892 | 75 |
| Telco Churn | 19 | 3 | 0.815 | 0.809 | 84 |
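The Reduction column follows directly from the feature counts as 1 − (QFS features / original features). A quick check, using a small hypothetical helper:

```python
def reduction_pct(original: int, selected: int) -> float:
    """Percentage of features removed: 100 * (1 - selected / original)."""
    return 100.0 * (1.0 - selected / original)


# (original features, QFS-selected features) from the table above.
results = {
    "Adult Income": (14, 2),
    "Bank Marketing": (16, 4),
    "Telco Churn": (19, 3),
}

for name, (n_orig, n_sel) in results.items():
    print(f"{name}: {reduction_pct(n_orig, n_sel):.1f}% fewer features")
```

The unrounded values are 85.7%, 75.0% and 84.2%, which round to the 86, 75 and 84 reported in the table.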

These results point toward computational quantum advantage and early industrial quantum usefulness once our methods run on commercial neutral-atom quantum processors, such as those provided by QuEra, Pasqal, Planqc, Atom Computing, and Infleqtion.

References

[1] Amazon Web Services, Inc. (n.d.). Analog Hamiltonian Simulation (AHS). In the Amazon Braket Developer Guide. Retrieved October 24, 2025, from https://docs.aws.amazon.com/braket/latest/developerguide/braket-analog-hamiltonian-simulation.html

[2] Becker, B., & Kohavi, R. (1996). Adult [Dataset]. UCI Machine Learning Repository. https://doi.org/10.24432/C5XW20

[3] Moro, S., Rita, P., & Cortez, P. (2014). Bank Marketing [Dataset]. UCI Machine Learning Repository. https://doi.org/10.24432/C5K306

[4] Kaggle. (n.d.). Telco Customer Churn [Dataset]. Kaggle. Retrieved October 24, 2025, from https://www.kaggle.com/datasets/blastchar/telco-customer-churn/data