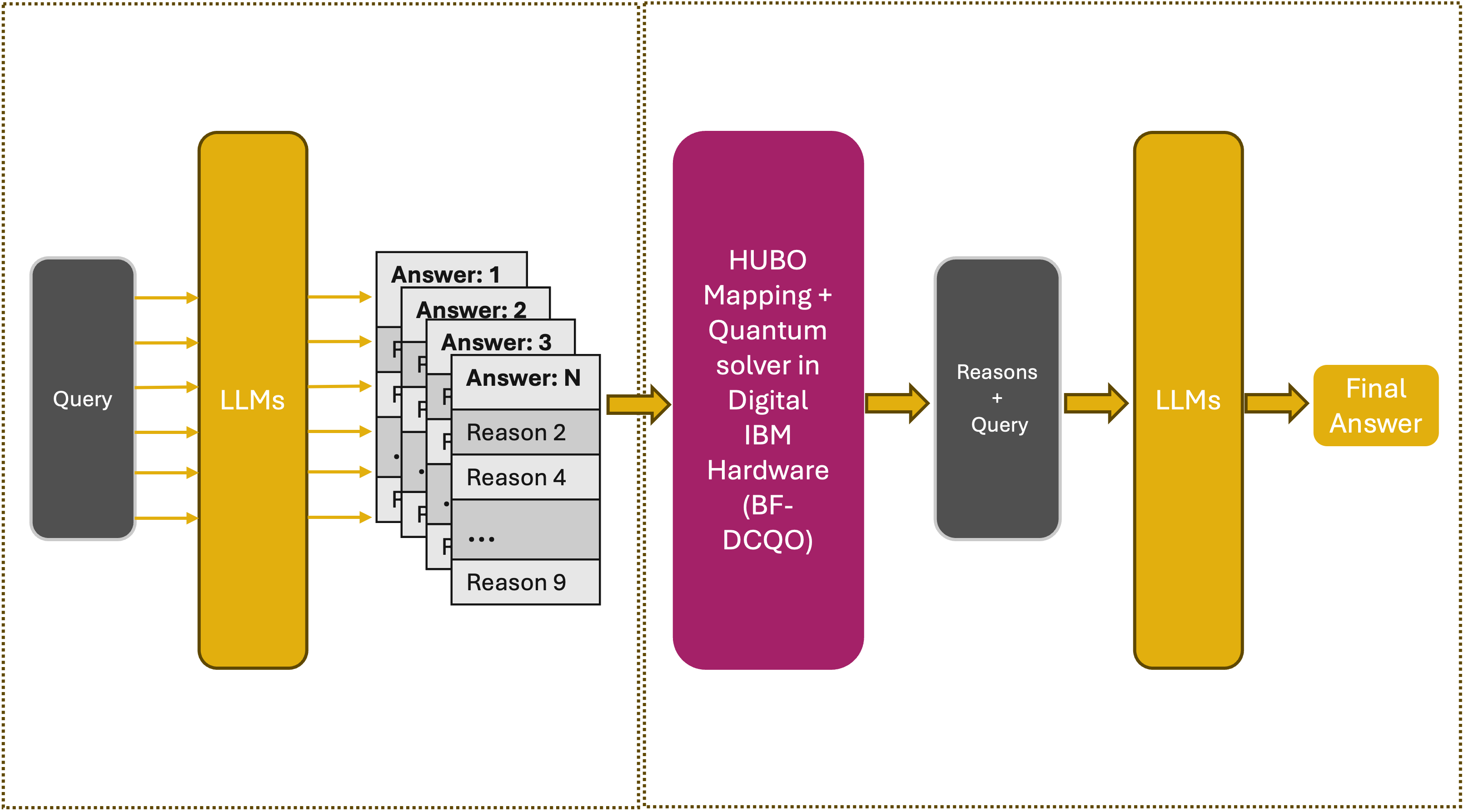

Figure 1. Pipeline of QR-LLM: reasoning fragments extracted from multiple LLM completions are mapped into a HUBO formulation and solved with a quantum optimizer (BF-DCQO), producing the final answer.

Quantum-Reasoning LLM (QR-LLM): Emergence of Quantum Intelligence

03.10.2025

From Chain-of-Thought to Combinatorial Reasoning

As large language models (LLMs) become central to AI applications, a key challenge remains: how to make their reasoning more accurate, more reliable, faster, and more scalable. While chain-of-thought prompting [1] helps models explain their steps, it also produces redundant, inconsistent, and low-quality fragments. We address this limitation by reformulating reasoning as a combinatorial optimization problem [2], which we solve with hybrid classical-quantum workflows built on digital, analog, or digital-analog quantum computing encodings. QR-LLMs can therefore be implemented as individual, iterated, or sequential runs, on homogeneous or heterogeneous hardware, using commercial quantum processors such as those from IBM, IonQ, QuEra, Atom Computing, Pasqal, Quantinuum, Rigetti, D-Wave, IQM, Xanadu, and Origin Quantum, among others.

In our approach, each reasoning fragment, or “reason”, extracted from multiple GPT-4-1 completions is treated as a binary decision variable: it is either selected or discarded. By combining these fragments into a Higher-Order Unconstrained Binary Optimization (HUBO) Hamiltonian, we explicitly capture individual importance (linear terms), pairwise consistency (quadratic terms), and higher-order coherence (k-local terms). This formulation is far more expressive than majority voting or simple filtering. It favors a final answer built from the most relevant and diverse reasons, avoiding redundancy and improving interpretability.
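To make the formulation concrete, the following is a minimal sketch of a HUBO cost function over four reasons, minimized by exhaustive search. The coefficients, the sign convention (negative weights reward selection, positive weights penalize it), and the names `hubo`, `hubo_energy`, and `brute_force_minimum` are illustrative assumptions, not the weights or code used in QR-LLM. Exhaustive search is only feasible for a handful of variables and stands in here for the actual solver.

```python
from itertools import product

# Hypothetical toy coefficients (not taken from the QR-LLM pipeline).
# Keys are tuples of reason indices; values are weights.
hubo = {
    (0,): -1.0,        # linear terms: individual importance
    (1,): -0.8,
    (2,): -0.5,
    (3,): -0.2,
    (0, 1): 1.5,       # quadratic penalty: reasons 0 and 1 are redundant
    (1, 2): -0.4,      # quadratic reward: reasons 1 and 2 are consistent
    (0, 2, 3): -0.6,   # 3-local reward: reasons 0, 2, 3 are jointly coherent
}

def hubo_energy(x, terms):
    """Sum the weight of every term whose reasons are all selected in x."""
    return sum(w for idx, w in terms.items() if all(x[i] for i in idx))

def brute_force_minimum(n, terms):
    """Return the lowest-energy bit assignment by checking all 2**n options."""
    return min(product((0, 1), repeat=n), key=lambda x: hubo_energy(x, terms))

best = brute_force_minimum(4, hubo)
selected = [i for i, bit in enumerate(best) if bit]
print(best, selected, hubo_energy(best, hubo))
```

With these toy weights the minimum selects reasons 0, 2, and 3 and discards reason 1, because the redundancy penalty between reasons 0 and 1 outweighs what reason 1 contributes on its own.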

Why Quantum Computing Matters

The power of this method comes at a cost: as the number of candidate reasons grows, the HUBO Hamiltonian quickly becomes dense. With just 120 reasons, we already face 7,140 pairwise interactions and 280,840 triplets; moving to k-body terms (k>>1) makes the number of terms grow combinatorially. Classical solvers such as simulated annealing slow down sharply under this growth and struggle to navigate flat, degenerate energy landscapes.
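The term counts quoted above follow from binomial coefficients: a fully dense HUBO over n reasons has at most C(n, k) distinct k-body terms. A short sketch, where the helper name `hubo_term_counts` is our own and the fourth-order row is added only to illustrate the growth:

```python
from math import comb

def hubo_term_counts(n_reasons, max_order):
    """Maximum number of distinct k-body terms for k = 1..max_order."""
    return {k: comb(n_reasons, k) for k in range(1, max_order + 1)}

counts = hubo_term_counts(120, 4)
for order, n_terms in counts.items():
    print(f"{order}-body terms: {n_terms:,}")
```

For 120 reasons this gives 7,140 pairs and 280,840 triplets, and the count jumps to 8,214,570 at fourth order.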

This is where quantum solvers come in. We employ our proprietary Bias-Field Digitized Counterdiabatic Quantum Optimization (BF-DCQO) [3], which runs on today’s digital quantum hardware, such as IBM and IonQ systems, and can handle high-order k-body Hamiltonian terms. At present, IBM’s quantum systems are the only commercial quantum processors on which we can scale up to 156 reasons (156 physical qubits). With the next generations on IBM’s roadmap, including the announced Nighthawk 2D chips and the modular Flamingo architectures [4], we expect to solve increasingly complex tasks and question prompts. Using current quantum processors, we are identifying the class of complex questions that will necessarily require quantum-advantage-level performance from our quantum algorithms, giving rise to the era of Quantum Intelligence.

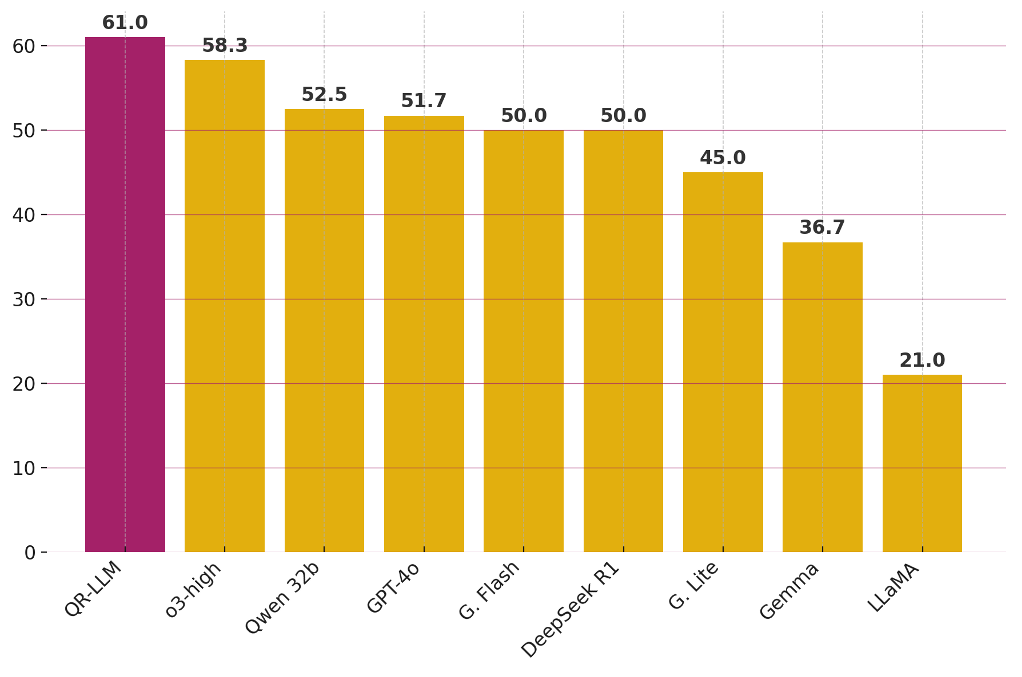

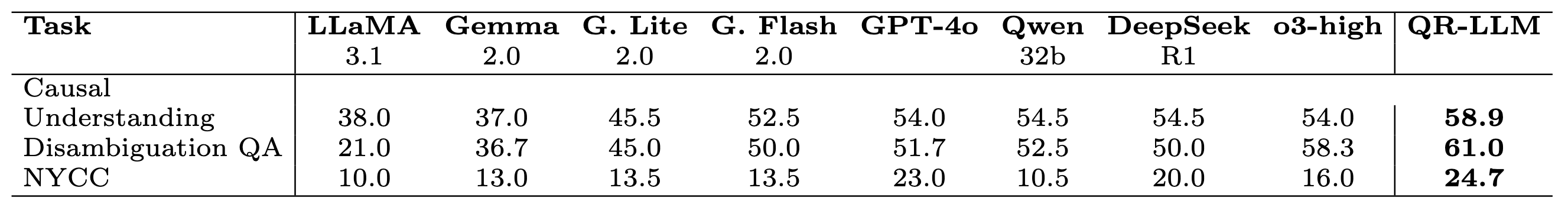

Benchmarking Results

We tested this framework against some of the most demanding reasoning benchmarks in BIG-Bench Extra Hard (BBEH) [5]: Causal Understanding, DisambiguationQA, and NYCC. These datasets are widely regarded as essential for evaluating new LLMs, as they probe multi-step inference, semantic disambiguation, and conceptual combination.

Our results show that our Quantum Combinatorial Reasoning outperforms reasoning-native models, such as OpenAI o3-high:

- +4.9% in Causal Understanding.

- +2.7% in DisambiguationQA.

- +8.7% in NYCC.

This establishes a clear quantum-advantage trajectory for strategic and complex LLM reasoning.

Towards Quantum Intelligence

- Order and structure of reasons: combining HUBO with Tree-of-Thoughts [6] to capture sequential dependencies.

- Ranking of reasons: moving beyond binary selection to importance-weighted outputs.

- Quantum Intelligence: exploiting k-body interactions to model reasoning at very high relational levels, where quantum advantage is a sine qua non.

- Hierarchical reasoning: hybrid pipelines where simple questions are solved classically, while complex, multi-hop problems invoke quantum solvers.

- Sequential architectures on heterogeneous hardware: leveraging hybrid pipelines that combine CPUs, GPUs, and quantum processors to deliver more complex answers across diverse computational backends for industrial quantum-intelligence applications.

This roadmap points toward a new paradigm: Quantum Intelligence (QI), where quantum optimization will progressively and continually augment quantum-reasoning LLMs. The consolidation of QI, as the next step beyond classical AI, will allow us to solve reasoning tasks once considered out of reach for classical methods, outperforming the reasoning capabilities of the human mind. It is therefore impossible to predict today how QI may shake humanity and where it will place our technological societies in a troubled world.

Bibliography

[1] WEI, Jason, et al. Chain-of-thought prompting elicits reasoning in large language models. Advances in neural information processing systems, 2022, vol. 35, p. 24824-24837.

[2] ESENCAN, Mert, et al. Combinatorial reasoning: Selecting reasons in generative AI pipelines via combinatorial optimization. arXiv preprint arXiv:2407.00071, 2024.

[3] CADAVID, Alejandro Gomez, et al. Bias-field digitized counterdiabatic quantum optimization. Physical Review Research, 2025, vol. 7, no 2, p. L022010.

[4] IBM QUANTUM. How IBM will build the world’s first large-scale, fault-tolerant quantum computer. https://www.ibm.com/quantum/blog/large-scale-ftqc, 2025.

[5] KAZEMI, Mehran, et al. Big-bench extra hard. arXiv preprint arXiv:2502.19187, 2025.

[6] YAO, Shunyu, et al. Tree of thoughts: Deliberate problem solving with large language models. Advances in neural information processing systems, 2023, vol. 36, p. 11809-11822.